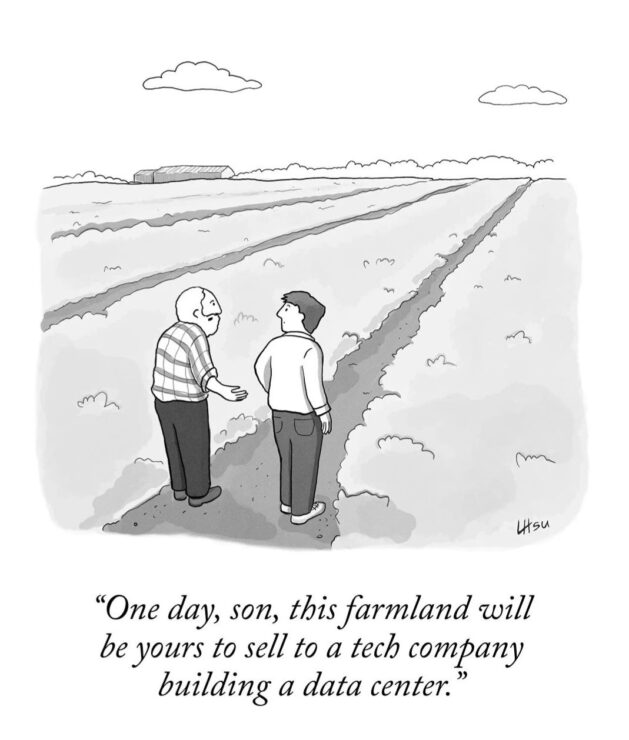

The recent picture above by Lynn Hsu in The New Yorker magazine depicts a lost opportunity for me.

When I moved to the Midwest nearly 30 years ago, I should have bought some farmland that I could now sell to Big Tech for big money to house their AI data centers!

Of course, tech companies are making massive investments in AI infrastructure to the tune of many billions of dollars.

These big investments are a sure sign that AI will become even more pervasive as technology plays a bigger role in our lives.

While we are still very much in the infancy of AI, I’m excited about the growing intersection of AI and the law.

We are starting to see law firms develop their own AI practice groups, corporate legal departments form their own AI teams, and law schools teach AI Law classes.

What I love about AI Law is that it’s multidisciplinary, it applies to virtually all lawyers, and it cuts across various legal practice areas, such as the following:

🤖 Employment Law: As humans are increasingly replaced in the workplace by AI solutions, this area of the law will become busier.

🤖 Ethics: AI needs to be created and used in an ethical and responsible manner. Lawyers will also have growing ethical obligations regarding their use of AI tools on behalf of their clients.

🤖 Intellectual Property: Our courts will provide increased clarity regarding the interplay between AI and copyright protection. Companies creating AI solutions continue to seek patent protection for their novel ideas and inventions.

🤖 Data Privacy: Properly protecting the massive amounts of data used in conjunction with AI models is a growing concern for everyone.

🤖 Regulatory: The AI regulatory landscape is changing as we speak, and our laws cannot keep up with the rapid pace of AI advancement.

🤖 Sustainability: As more data centers are built across the globe, adding to carbon emissions, how do we continue to protect our environment?

🤖 Cybercrime: As AI advances, bad actors have more tools to commit cybercrime and fraud and to create deepfakes.

🤖 Competition Law: While there are many AI solutions available in the marketplace, how do our laws continue to protect consumers and preserve competition?

🤖 Legal Operations: Lawyers will increasingly use AI tools to save time, be more productive, and deliver higher-value legal services to their clients.

The AI era is an exciting time for lawyers: we have big opportunities to help our clients navigate a growing AI world and to use leading AI solutions to serve them better.